Solution: AI Chatbot

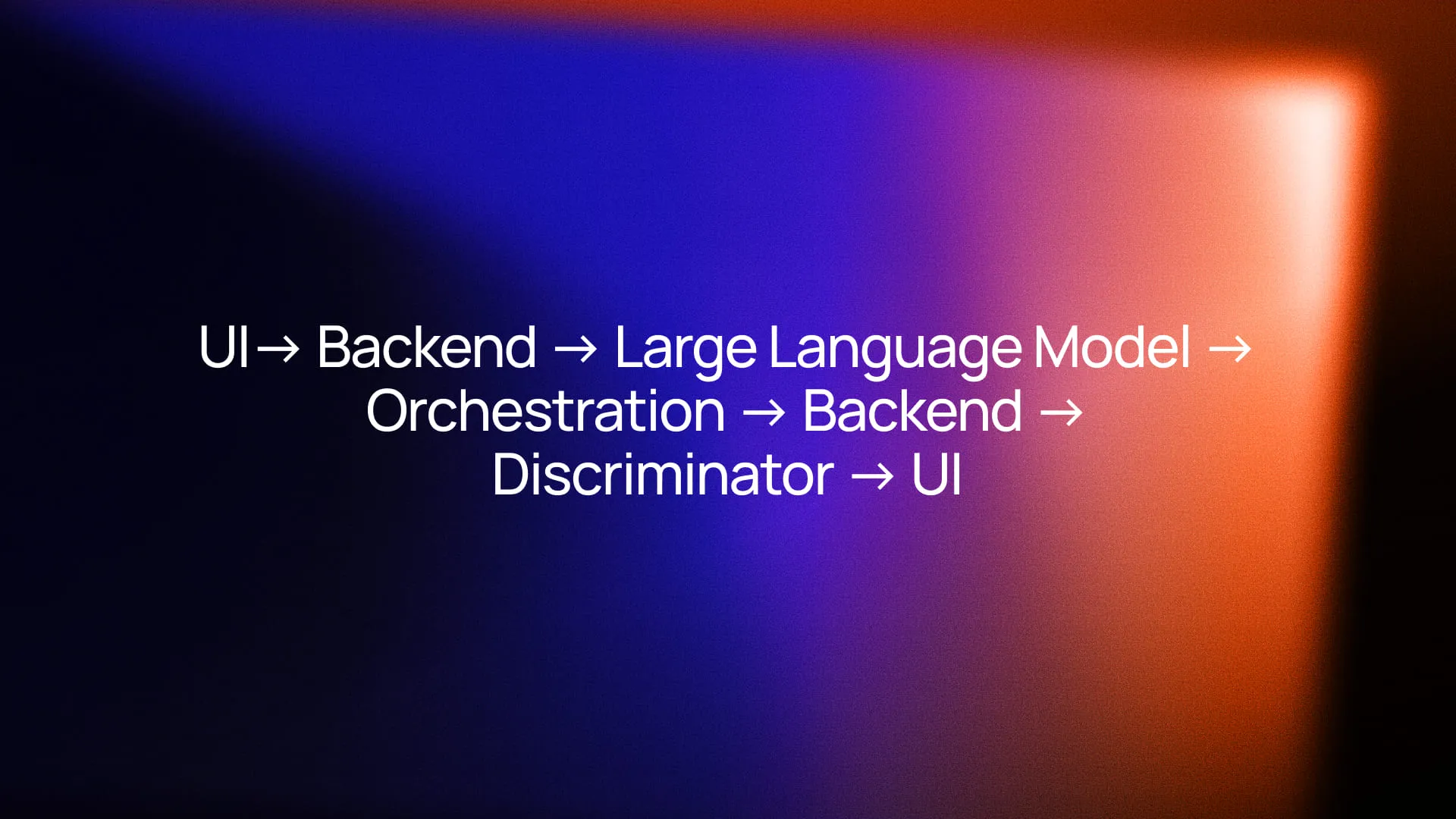

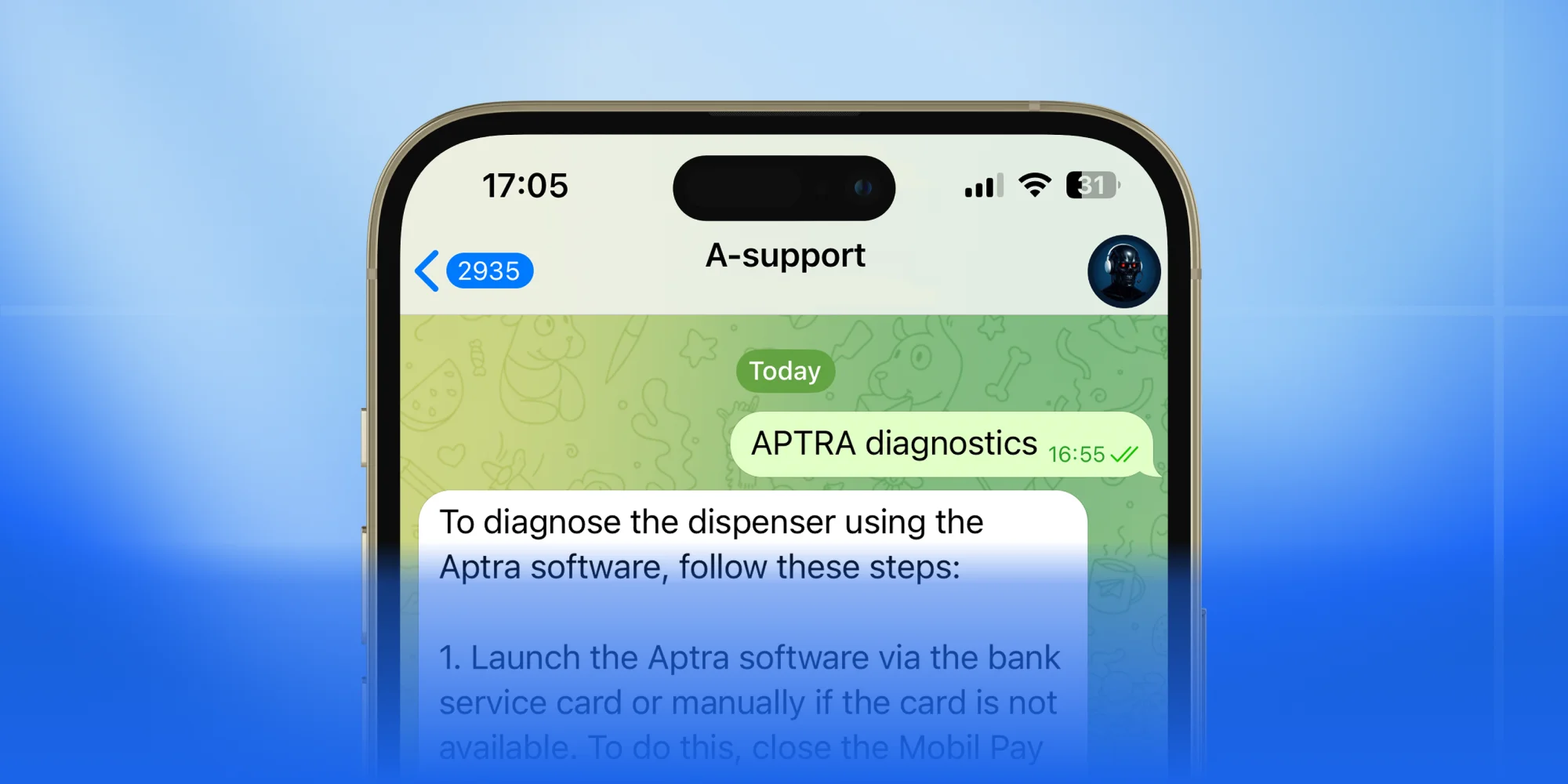

To meet these goals, we decided together with the client to build an AI-powered chatbot that communicates with users in natural language. By querying various APIs, the bot can check current stock availability and provide all the necessary product information.
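As a rough illustration of the stock lookup, here is a minimal sketch rather than the project's actual code. The names `StockInfo`, `fake_inventory_api`, and `answer_stock_question`, the product codes, and the catalog data are all hypothetical. The in-memory function stands in for whatever inventory API the bot really calls, and the natural-language understanding step is left out entirely.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StockInfo:
    """Product data the bot needs in order to answer a stock question."""
    name: str
    in_stock: int
    price: float


def fake_inventory_api(sku: str) -> Optional[StockInfo]:
    """Hypothetical stand-in for a real inventory API request."""
    catalog = {
        "SKU-1": StockInfo("Desk lamp", 4, 29.90),
        "SKU-2": StockInfo("Office chair", 0, 149.00),
    }
    return catalog.get(sku)


def answer_stock_question(
    sku: str,
    lookup: Callable[[str], Optional[StockInfo]] = fake_inventory_api,
) -> str:
    """Turn the result of an inventory lookup into a reply for the user."""
    item = lookup(sku)
    if item is None:
        return f"Sorry, I could not find a product with code {sku}."
    if item.in_stock > 0:
        return f"{item.name} is in stock ({item.in_stock} available) at {item.price:.2f}."
    return f"{item.name} is currently out of stock."


if __name__ == "__main__":
    print(answer_stock_question("SKU-1"))
    print(answer_stock_question("SKU-2"))
```

Passing the lookup as a parameter keeps the reply logic independent of any particular backend, so the stub can be swapped for a real API client without changing how the answer is worded.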