Customer and His Platform

Bhakti Vikasa Swami is one of the organization's leading gurus and was personally trained by its founder, Prabhupada. He regularly lectures in various countries and actively runs his YouTube channel, which currently has over 120,000 subscribers and more than 2,500 uploaded videos.

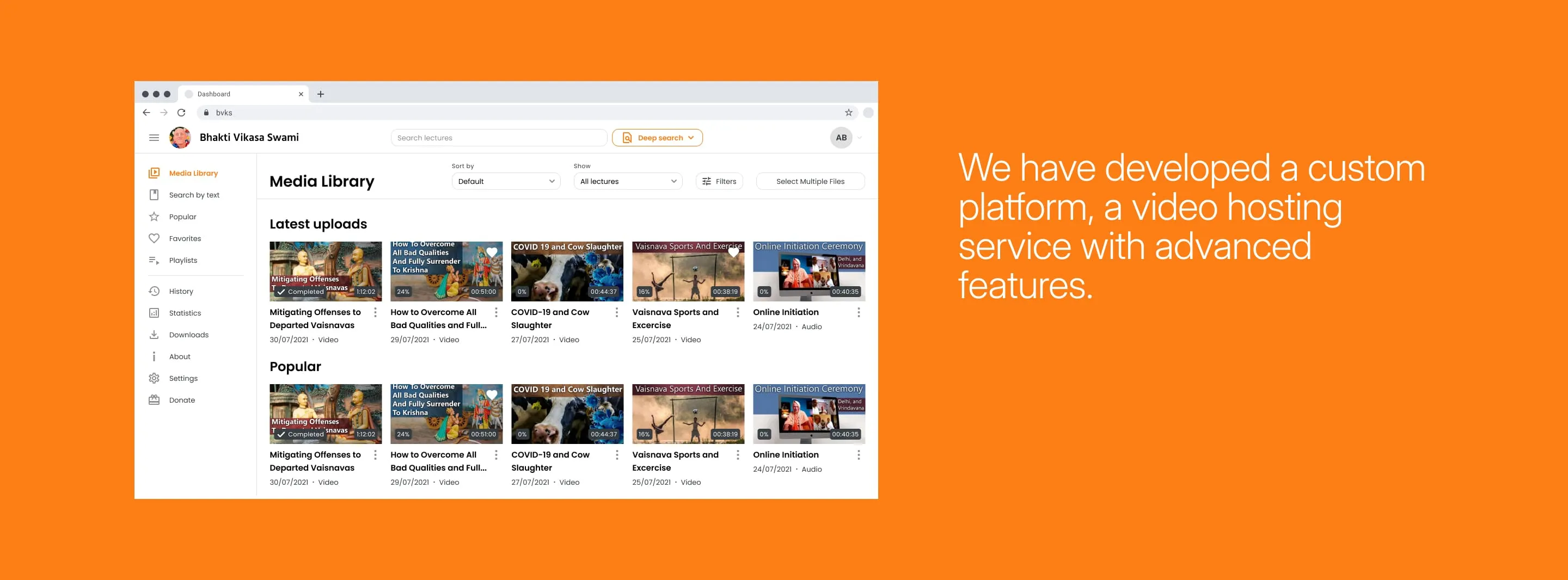

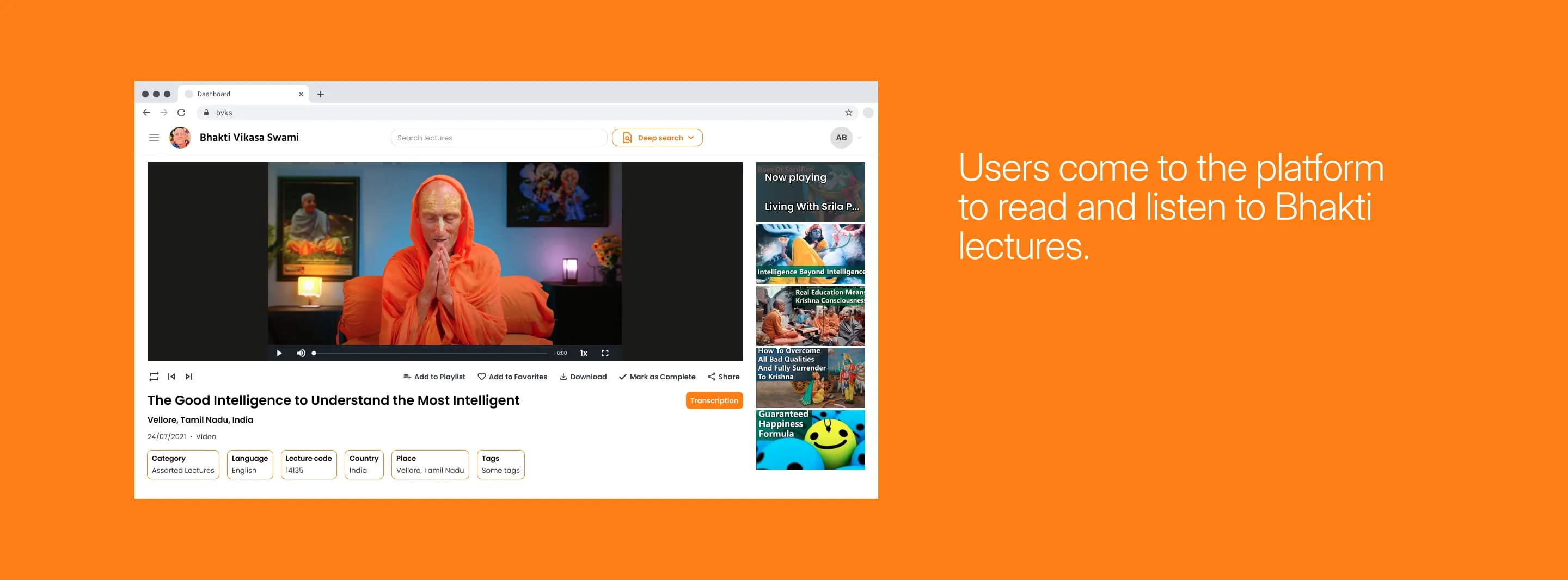

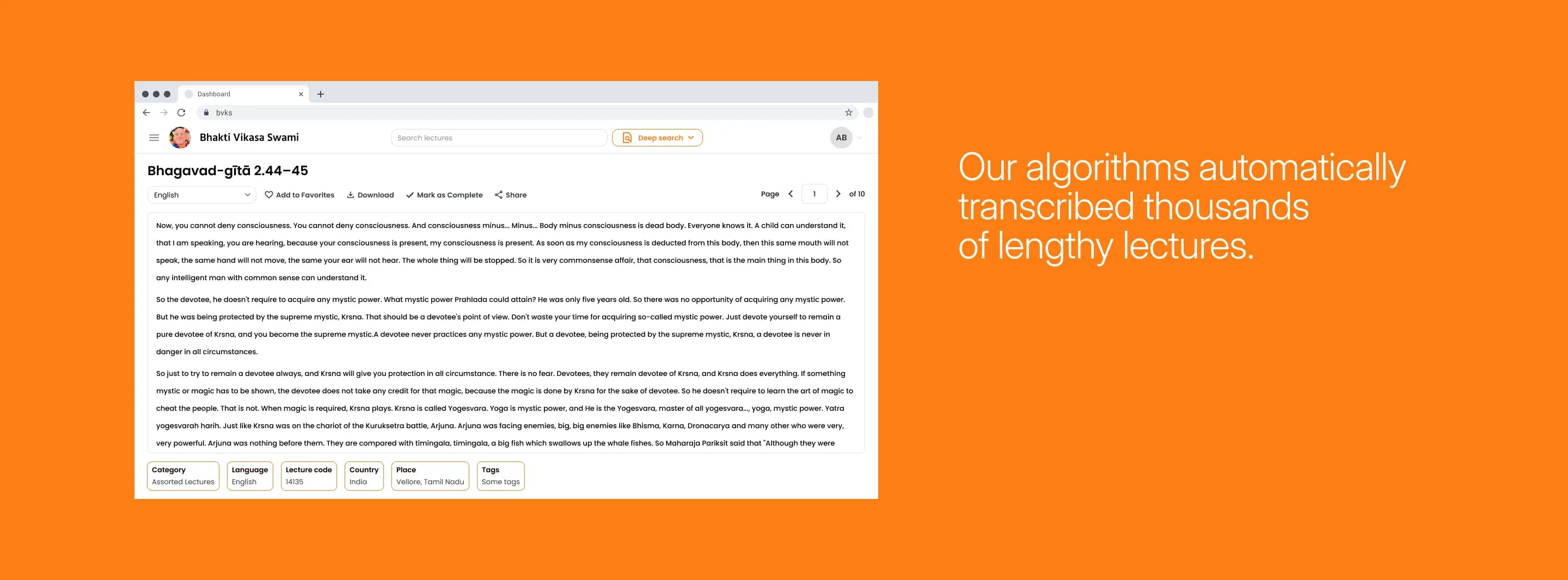

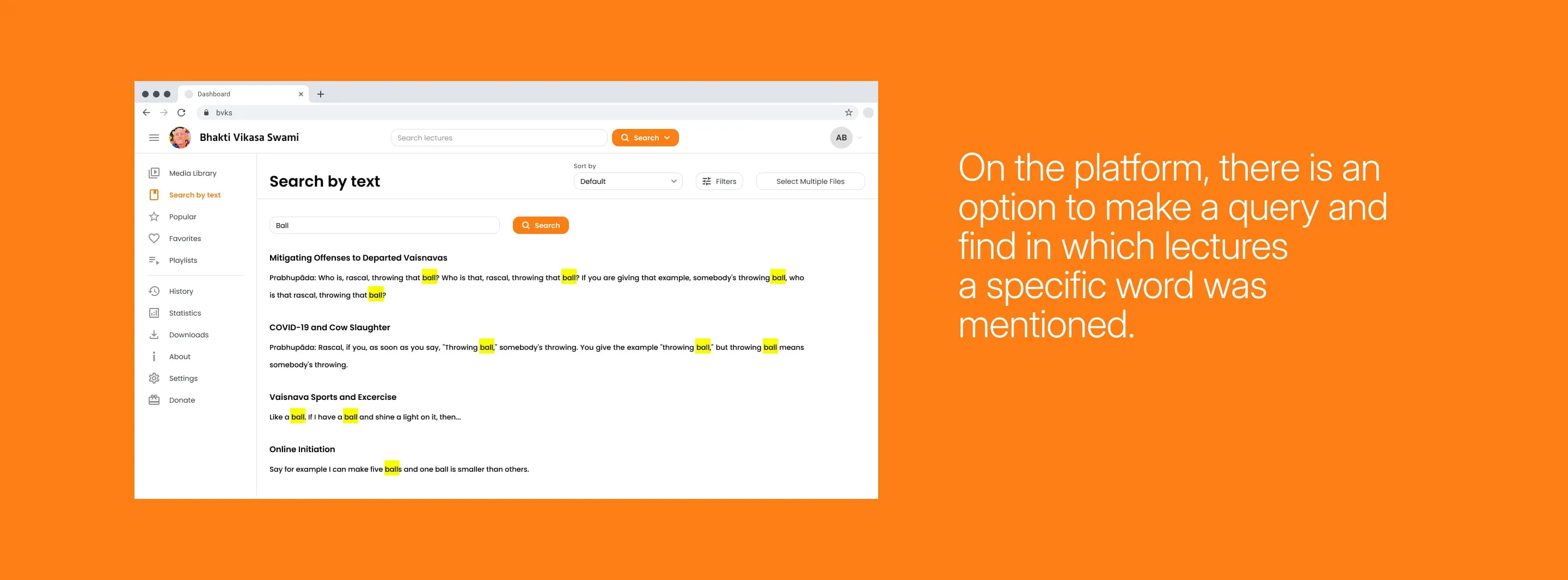

Two years ago, we built a separate website for Bhakti Vikasa Swami and his lectures: a mini-YouTube for his followers and disciples. We built the entire site on Firebase, Google's app development platform, whose hosted database and services let web and mobile applications run without a custom backend. Lectures are published in large numbers, in both video and audio formats, on YouTube and on the proprietary platform.