Project Start

Today we share how we helped a guru’s followers access his lectures in several formats: video, audio, and text. This is the story of building a custom video hosting platform and automating the transcription of lectures.

Our customer, Bhakti Vikasa Swami, is one of the leading gurus of ISKCON and personally studied under Prabhupada, the founder of the organization. He regularly gives lectures in different countries and actively runs his YouTube channel.

Customer’s YouTube Channel

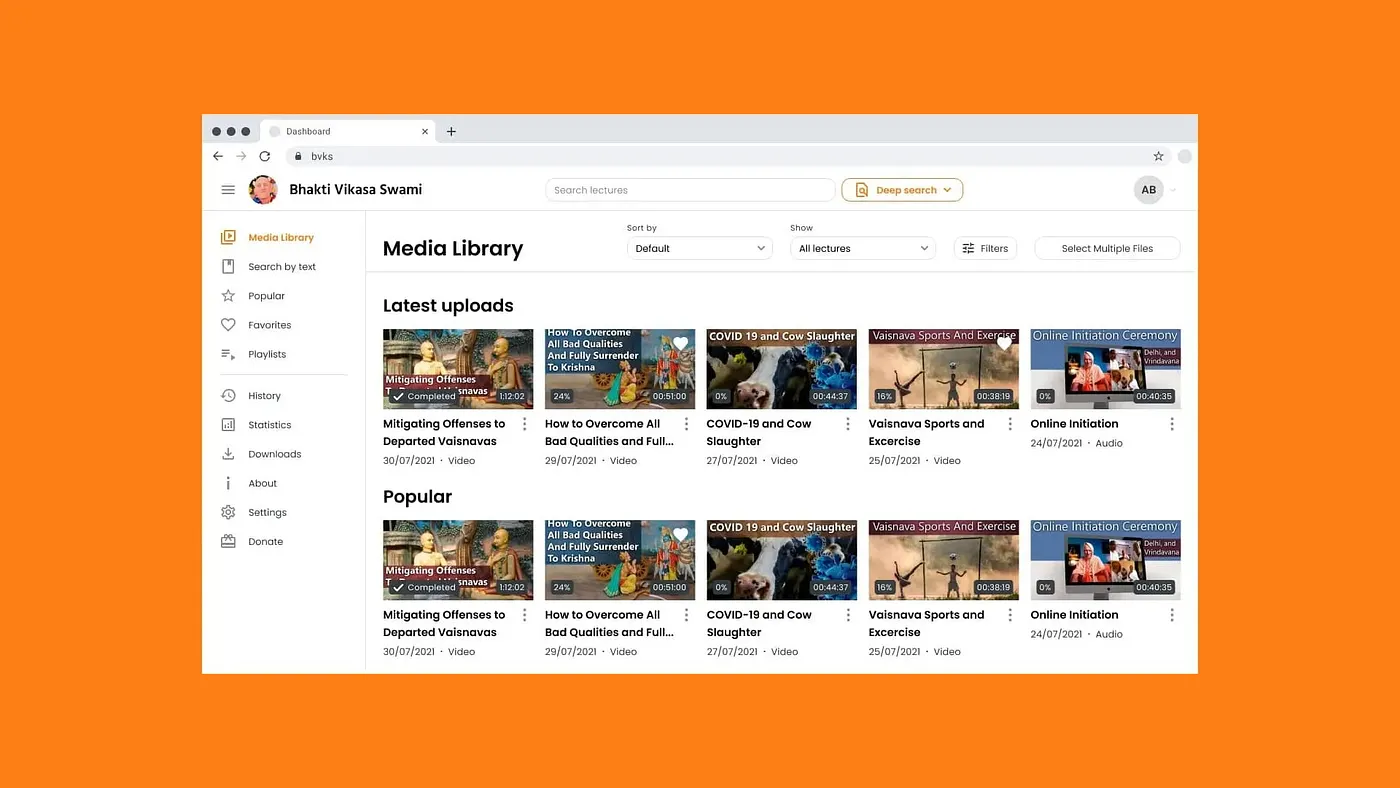

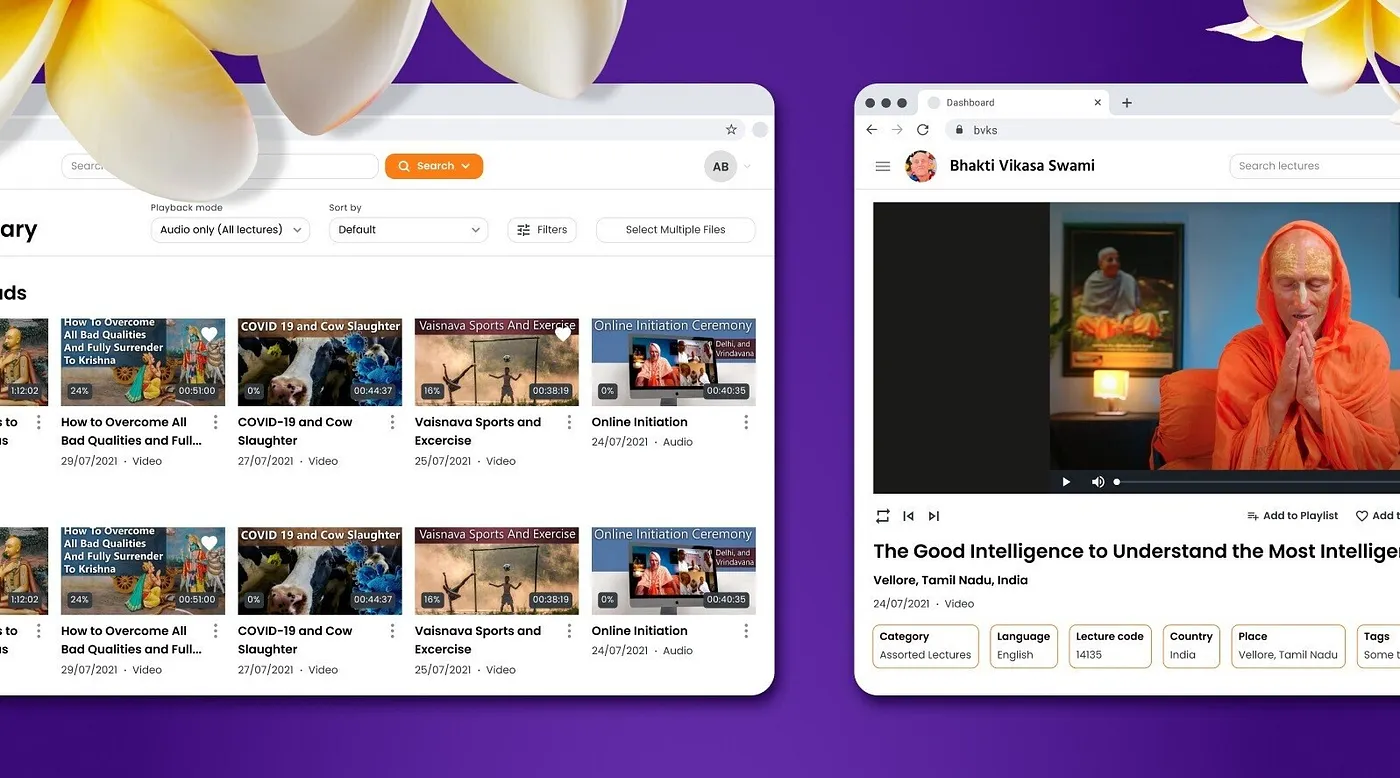

Two years ago, we at Unistory developed a dedicated website for Bhakti Vikasa Swami and his lectures: a mini YouTube for his followers and disciples. We built the video platform on Firebase, Google’s backend-as-a-service platform, whose hosted database and related services let web and mobile applications run without a custom backend.

A large volume of lectures in both video and audio formats is published on YouTube and on the custom platform. Currently, there are over 120,000 subscribers, and more than 2,500 videos have been uploaded.

We developed a new platform, a video hosting service with enhanced features

Automating Video Transcription

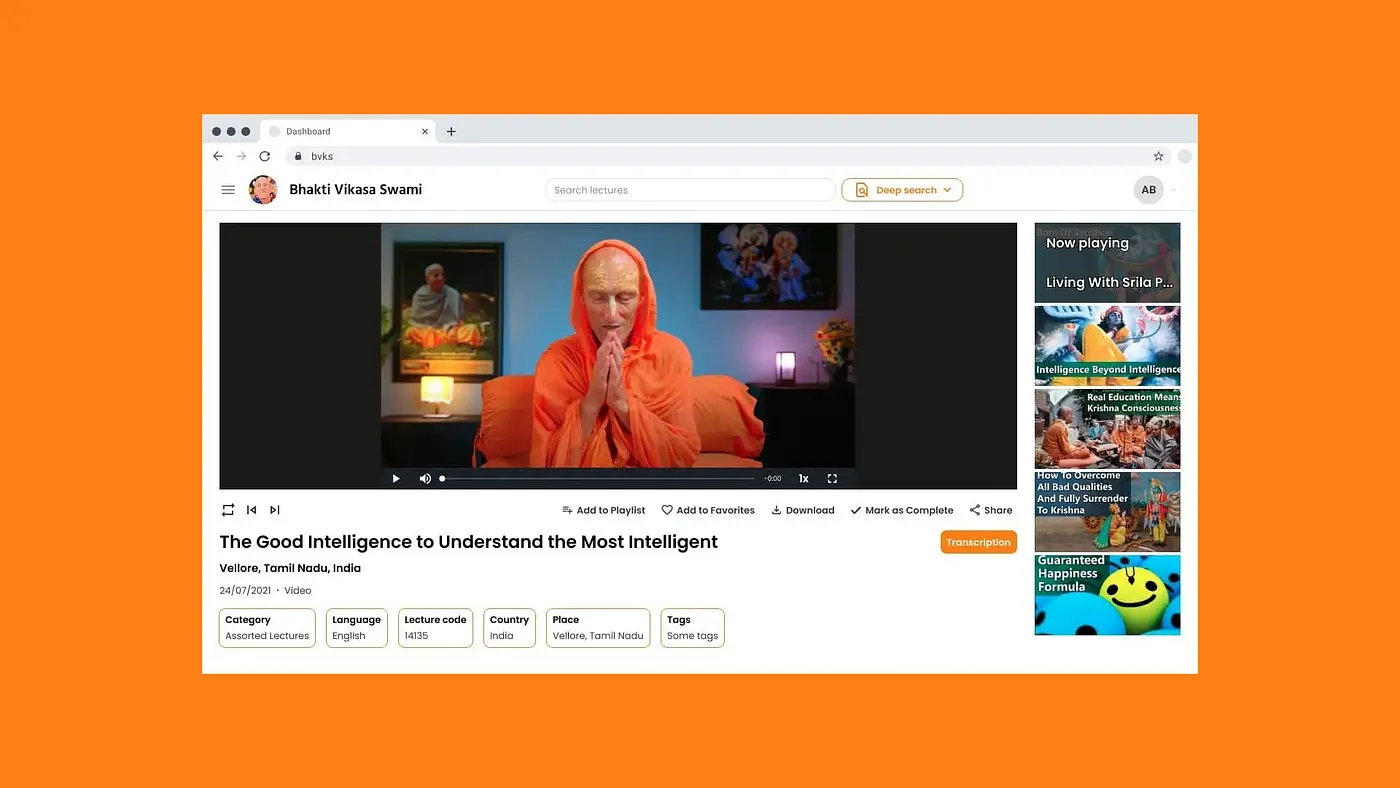

Two years after developing the platform, the client returned to us with a new idea: to publish transcriptions of the video lectures on the site. It turned out that a significant portion of Bhakti’s audience prefers the text format. However, manually transcribing hundreds or thousands of lectures is an overwhelming task. Our challenge was to automate this process.

How did we do it? If the words “neural networks” came to mind, you guessed right. Below, we detail how we automated the conversion of video to text, the nuances we encountered, and why our approach suited this project better than off-the-shelf transcription services.

Users come to the platform to read, listen to, and watch Bhakti’s lectures

Integration with Elasticsearch

Another idea from the client was to help users search for information more accurately on the platform.

A typical use case: a follower visits the YouTube channel to find out what their spiritual teacher thinks about relationships between spouses.

The search returns videos, but not all of them are relevant to the query: some are about relationships with the guru or with friends, while others discuss one’s relationship with God.

Another issue: even if the user finds the right video, it may last two or three hours and cover many different topics. Together with the client, we decided to help Bhakti’s followers find answers to their questions.

We created algorithms that automatically transcribe thousands of hours of lectures

Whisper AI and ChatGPT

To transcribe the videos, we chose Whisper, OpenAI’s neural network for speech recognition. Whisper performs well at transcription, but its raw output is often not polished enough to publish.

The material still requires manual editing, but in our case, due to the vast number of videos, this was not feasible. To produce high-quality cleaned-up transcripts manually, we would have needed to assign several dozen employees to work for a month or more.

To edit the text after transcription, we implemented an algorithm that runs each transcript through ChatGPT. The result is a stylistically polished lecture transcript with far fewer errors than the raw output.
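The editing script itself is not published, so the following is only a minimal Python sketch of how such a step could be structured. Long lectures are split into word-bounded chunks (word count is a rough proxy for the model’s context limit, and the 1,500-word default is an arbitrary assumption), each chunk is passed to an editing function, and the results are joined. `split_into_chunks`, `polish_transcript`, `edit_chunk`, and `EDIT_INSTRUCTIONS` are hypothetical names; `edit_chunk` stands in for whatever wraps the ChatGPT API call and is injected so the pipeline can be tested offline.

```python
from typing import Callable, List

# Hypothetical instructions; the prompt actually used in the project is not published.
EDIT_INSTRUCTIONS = (
    "Fix punctuation, casing, and obvious mis-transcriptions in this lecture "
    "excerpt. Do not add, remove, or reinterpret content."
)


def split_into_chunks(text: str, max_words: int = 1500) -> List[str]:
    """Split a transcript into chunks of at most max_words words.

    Splitting happens only at whitespace, so no word is cut in half.
    Note: all whitespace, including line breaks, becomes single spaces.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def polish_transcript(
    raw_text: str,
    edit_chunk: Callable[[str, str], str],
    max_words: int = 1500,
) -> str:
    """Send each chunk through the (hypothetical) LLM editing step and rejoin.

    edit_chunk(instructions, chunk) is a placeholder for the chat model call.
    """
    edited = [
        edit_chunk(EDIT_INSTRUCTIONS, chunk)
        for chunk in split_into_chunks(raw_text, max_words)
    ]
    return "\n\n".join(edited)
```

Injecting the model call keeps the chunking logic independent of any particular client library, and a fake editor (for example, one that upper-cases its input) is enough to exercise the pipeline.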

The script took several months to process all the lectures. That is a lengthy process, but it is still far faster and cheaper than editing the transcripts by hand.
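A job that runs for months will almost certainly be interrupted at some point. One common way to make it restartable, assumed here rather than taken from the project, is to record finished lecture IDs in a checkpoint file and skip them on the next run. `run_batch`, `load_done`, `save_done`, and the JSON checkpoint format are hypothetical, and `process` stands in for the transcribe-and-polish step.

```python
import json
from pathlib import Path
from typing import Callable, Iterable, Set


def load_done(checkpoint: Path) -> Set[str]:
    """IDs of lectures finished in earlier runs (empty on the first run)."""
    if not checkpoint.exists():
        return set()
    return set(json.loads(checkpoint.read_text(encoding="utf-8")))


def save_done(checkpoint: Path, done: Set[str]) -> None:
    """Write to a temp file, then rename it over the checkpoint.

    The rename is atomic on POSIX filesystems, so an interruption during
    the write does not leave a half-written checkpoint behind.
    """
    tmp = checkpoint.with_name(checkpoint.name + ".tmp")
    tmp.write_text(json.dumps(sorted(done)), encoding="utf-8")
    tmp.replace(checkpoint)


def run_batch(
    lecture_ids: Iterable[str],
    process: Callable[[str], None],
    checkpoint: Path,
) -> int:
    """Process lectures one by one; return how many were handled in this run."""
    done = load_done(checkpoint)
    processed = 0
    for lecture_id in lecture_ids:
        if lecture_id in done:
            continue  # finished in an earlier run
        process(lecture_id)  # hypothetical: transcribe, polish, store
        done.add(lecture_id)
        save_done(checkpoint, done)  # record progress after every lecture
        processed += 1
    return processed
```

With this design, a crash costs at most the lecture that was in progress, which is simply redone on the next run.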

Moderation Feature

Even with editing through ChatGPT, there remains a chance of stylistic and factual errors. We decided to give users the ability to report these errors.

Visitors to the platform can report any mistakes to the administrator, who then corrects the text or dismisses the report. We are currently finishing the technical implementation of this feature.
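The report workflow described above can be modeled as a small state machine: a report starts as pending and the administrator either fixes the text or dismisses the report. The sketch below is an in-memory illustration under that assumption; `ModerationQueue`, `ErrorReport`, `Status`, and their fields are hypothetical names, and a real deployment would store reports in the platform’s database.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Status(Enum):
    PENDING = "pending"
    FIXED = "fixed"
    DISMISSED = "dismissed"


@dataclass
class ErrorReport:
    report_id: int
    lecture_id: str
    quoted_text: str  # fragment of the transcript the visitor flagged
    comment: str      # visitor's description of the mistake
    status: Status = Status.PENDING


class ModerationQueue:
    """Hypothetical in-memory report store."""

    def __init__(self) -> None:
        self._reports: Dict[int, ErrorReport] = {}
        self._next_id = 1

    def submit(self, lecture_id: str, quoted_text: str, comment: str) -> ErrorReport:
        """A visitor reports a mistake; the report starts as PENDING."""
        report = ErrorReport(self._next_id, lecture_id, quoted_text, comment)
        self._reports[report.report_id] = report
        self._next_id += 1
        return report

    def pending(self) -> List[ErrorReport]:
        """Reports still waiting for the administrator, oldest first."""
        return [r for r in self._reports.values() if r.status is Status.PENDING]

    def fix(self, report_id: int, transcripts: Dict[str, str], replacement: str) -> None:
        """Accept a report and replace the first occurrence of the flagged fragment."""
        report = self._get_pending(report_id)
        text = transcripts[report.lecture_id]
        if report.quoted_text not in text:
            raise ValueError("flagged fragment not found in transcript")
        transcripts[report.lecture_id] = text.replace(report.quoted_text, replacement, 1)
        report.status = Status.FIXED

    def dismiss(self, report_id: int) -> None:
        """Reject a report without changing the transcript."""
        self._get_pending(report_id).status = Status.DISMISSED

    def _get_pending(self, report_id: int) -> ErrorReport:
        report = self._reports[report_id]
        if report.status is not Status.PENDING:
            raise ValueError(f"report {report_id} is already {report.status.value}")
        return report
```

Refusing to act on a report that is no longer pending prevents two administrators from handling the same report twice.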

Bhakti Vikasa Swami

Word Search Within Videos

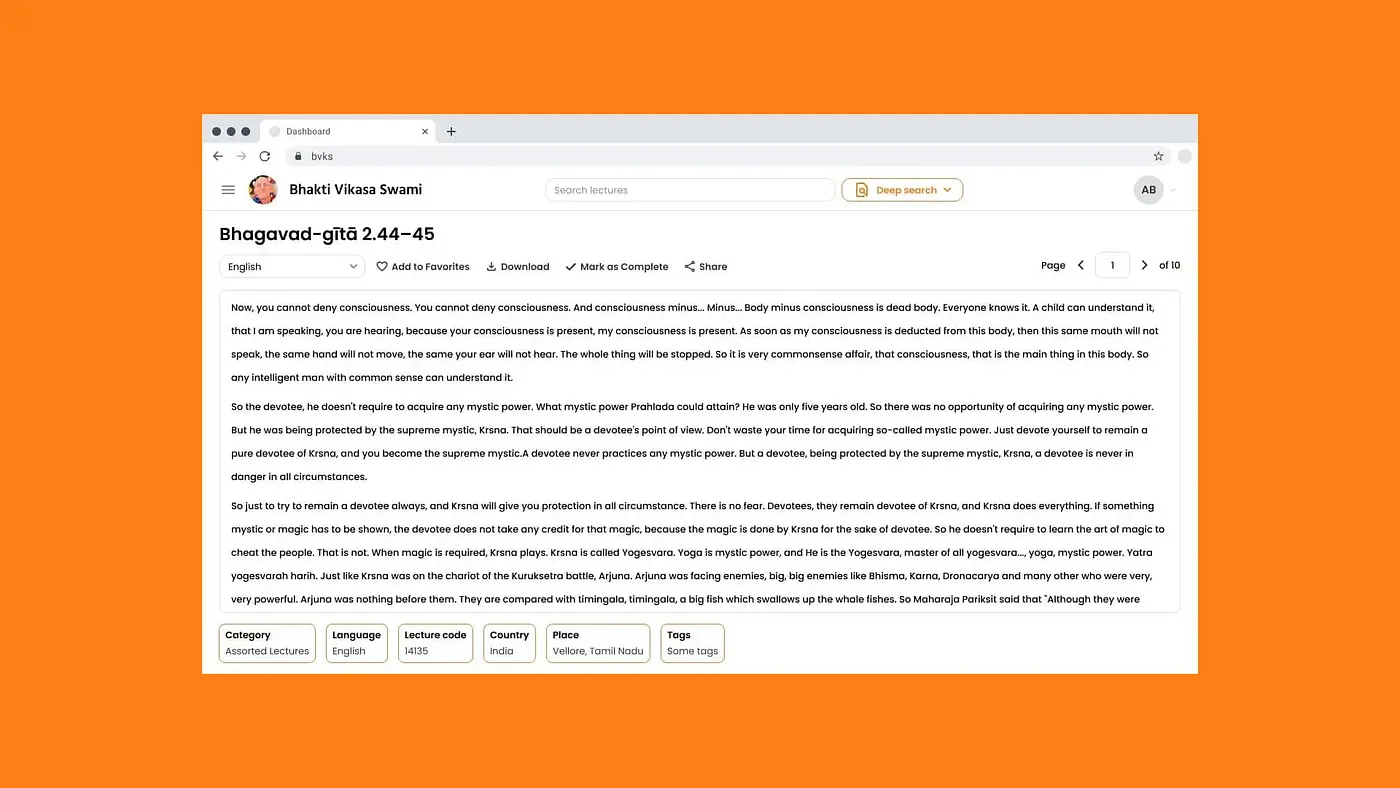

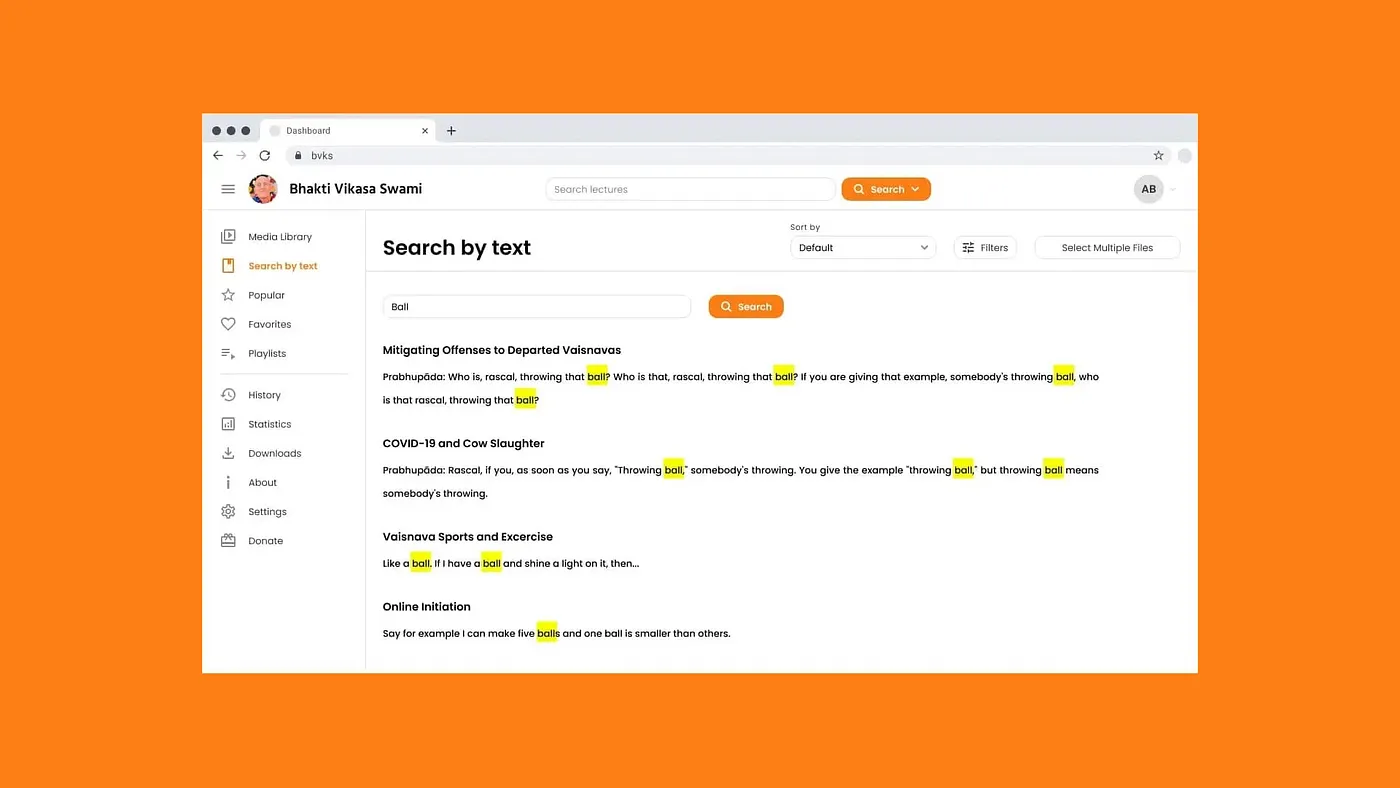

We had converted the audio and video into text, but another challenge remained: helping users find specific words, and the context around them, within the lectures.

To address this, we chose Elasticsearch, a search engine built for fast full-text search across large datasets.

Since Elasticsearch cannot search for words in audio or video, the search on the platform is conducted using the transcriptions of the lectures that we automated in the previous phase. Each transcription is linked to its corresponding video/audio version, allowing Elasticsearch to determine how many times a particular word is mentioned in a specific lecture.
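To illustrate why linking each transcript to its lecture is enough to count mentions, here is a toy in-memory inverted index in Python. It is not Elasticsearch’s API: it only crudely mimics what Elasticsearch’s analyzers and index do, mapping each term to the lectures that contain it and the number of occurrences. The regex tokenizer and all function names are assumptions for illustration.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Crude stand-in for an Elasticsearch analyzer: lowercase word tokens.
TOKEN = re.compile(r"[a-z0-9']+")


def tokenize(text: str) -> List[str]:
    return TOKEN.findall(text.lower())


def build_index(transcripts: Dict[str, str]) -> Dict[str, Counter]:
    """Map each term to a Counter of {lecture_id: occurrences}."""
    index: Dict[str, Counter] = defaultdict(Counter)
    for lecture_id, text in transcripts.items():
        for term in tokenize(text):
            index[term][lecture_id] += 1
    return dict(index)


def mentions(index: Dict[str, Counter], word: str) -> List[Tuple[str, int]]:
    """Lectures that mention the word, most mentions first."""
    return index.get(word.lower(), Counter()).most_common()
```

Elasticsearch builds a far richer version of this structure (with stemming, positions, and relevance scoring), but the principle is the same: search runs over the text, and each hit points back to its video or audio lecture.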

In the user interface (UI), we split search into two modes: standard search, which finds a lecture by its title, and Deepsearch, which finds lectures by specific words mentioned in them.
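The two search modes could map onto two Elasticsearch Query DSL request bodies, sketched below as plain Python dictionaries. The `match` query and the `highlight` section (with `fragment_size` and `number_of_fragments`) are standard Query DSL; the field names `title` and `transcript`, the `size` default, and the choice of `"operator": "and"` for Deepsearch are assumptions, not the platform’s actual schema.

```python
from typing import Any, Dict


def build_search_body(query: str, deep: bool, size: int = 20) -> Dict[str, Any]:
    """Build a Query DSL request body for one of the two search modes.

    Standard search matches lecture titles; Deepsearch matches transcript
    text and asks Elasticsearch to return highlighted passages as context.
    Field names "title" and "transcript" are hypothetical.
    """
    if not query.strip():
        raise ValueError("empty query")
    if not deep:
        return {"size": size, "query": {"match": {"title": query}}}
    return {
        "size": size,
        # "and": every query word must appear in the transcript.
        "query": {"match": {"transcript": {"query": query, "operator": "and"}}},
        "highlight": {
            "fields": {"transcript": {"fragment_size": 150, "number_of_fragments": 3}}
        },
    }
```

The resulting dictionary can be sent as the JSON body of a `_search` request against the lectures index; for Deepsearch, each hit then carries a `highlight` entry with the matching passages, which shows the user the context around the searched words.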

We implemented the ability to search for specific words within all videos uploaded to the platform

Results and Plans

The client had two objectives, and we successfully addressed both by leveraging AI-generated transcriptions. Users of the platform can now read the preacher’s lectures and precisely search for specific videos based on the content rather than just the titles.

In the near future, we plan to enhance the Deepsearch feature by displaying the exact timestamps when Bhakti mentioned the searched word in the video. These timestamps will also be pulled from the text version.
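One way to obtain such timestamps, assuming the raw Whisper output is stored next to the polished text (the ChatGPT editing step does not preserve timing), is to search Whisper’s segments. The open-source `openai-whisper` package’s `transcribe()` returns a `segments` list whose entries carry `start`, `end`, and `text` keys. The sketch below takes that list as plain dictionaries and returns the start times of segments that mention a word; `find_mentions` and `to_timestamp` are hypothetical helpers.

```python
import re
from typing import Dict, List


def find_mentions(segments: List[Dict], word: str) -> List[float]:
    """Start times (seconds) of segments containing word as a whole word.

    segments is assumed to follow the shape of openai-whisper's
    transcribe()["segments"]: dicts with "start", "end", and "text".
    """
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    return [seg["start"] for seg in segments if pattern.search(seg["text"])]


def to_timestamp(seconds: float) -> str:
    """Format seconds as H:MM:SS for display next to a search result."""
    hours, rest = divmod(int(seconds), 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"
```

Whole-word matching keeps “marriage” from also matching “marriages”; a production version would more likely reuse the search engine’s own analyzer so that timestamps and search results agree.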

Even More Interesting Projects

If you’re interested in unconventional development approaches and unique projects, check out our portfolio. Here, we share how we take on ideas that seem impossible at first and turn them into reality. Proof of concept in one day, a working project in three months!